About

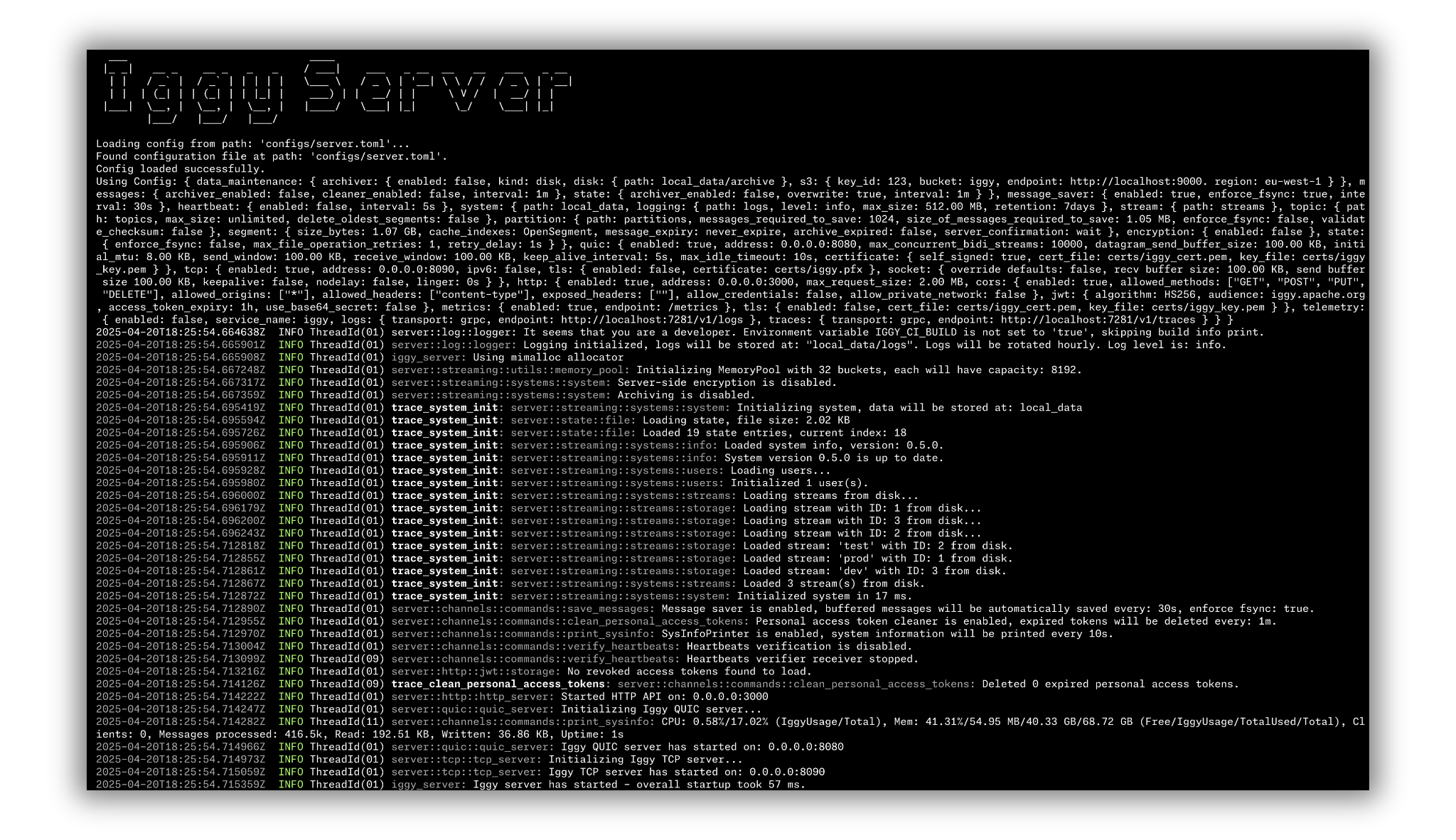

Iggy is a persistent message streaming platform written in Rust, supporting QUIC, TCP, WebSocket (custom binary specification) and HTTP (regular REST API) transport protocols, capable of processing millions of messages per second at ultra-low latency.

Iggy provides exceptionally high throughput and performance while utilizing minimal computing resources.

This is not yet another extension running on top of existing infrastructure, such as Kafka or a SQL database.

Iggy is a persistent message streaming log built from the ground up using low-level I/O, with a thread-per-core, shared-nothing architecture, io_uring and compio for maximum speed and efficiency.
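As a rough illustration of the thread-per-core, shared-nothing idea, the std-only Rust sketch below gives each worker thread exclusive ownership of a subset of partitions and routes every request to the owning thread over a channel, so the per-partition logs need no locks. This is not Iggy's implementation, which is built on io_uring via compio. The `Shards` and `Command` types and the modulo routing rule are hypothetical, and the threads are not pinned to CPU cores.

```rust
use std::collections::HashMap;
use std::sync::mpsc::{channel, Sender};
use std::thread::{self, JoinHandle};

// Hypothetical message type sent to the shard that owns a partition.
enum Command {
    Append { partition: u32, payload: Vec<u8> },
    // Ask the owning shard how many messages it holds for a partition.
    Count { partition: u32, reply: Sender<usize> },
    Shutdown,
}

// Hypothetical sketch: one thread per shard. A real thread-per-core design
// would also pin each thread to a CPU core; this sketch does not.
pub struct Shards {
    senders: Vec<Sender<Command>>,
    handles: Vec<JoinHandle<()>>,
}

impl Shards {
    pub fn start(count: usize) -> Self {
        let mut senders = Vec::with_capacity(count);
        let mut handles = Vec::with_capacity(count);
        for _ in 0..count {
            let (tx, rx) = channel::<Command>();
            senders.push(tx);
            handles.push(thread::spawn(move || {
                // State private to this thread: nothing is shared with other shards.
                let mut logs: HashMap<u32, Vec<Vec<u8>>> = HashMap::new();
                for cmd in rx {
                    match cmd {
                        Command::Append { partition, payload } => {
                            logs.entry(partition).or_default().push(payload)
                        }
                        Command::Count { partition, reply } => {
                            let n = logs.get(&partition).map_or(0, |log| log.len());
                            let _ = reply.send(n);
                        }
                        Command::Shutdown => break,
                    }
                }
            }));
        }
        Shards { senders, handles }
    }

    // Route by partition id so a partition is always handled by the same shard.
    fn owner(&self, partition: u32) -> &Sender<Command> {
        &self.senders[partition as usize % self.senders.len()]
    }

    pub fn append(&self, partition: u32, payload: Vec<u8>) {
        self.owner(partition)
            .send(Command::Append { partition, payload })
            .expect("shard thread stopped");
    }

    // Channels are FIFO, so a count observes all earlier appends to that shard.
    pub fn count(&self, partition: u32) -> usize {
        let (tx, rx) = channel();
        self.owner(partition)
            .send(Command::Count { partition, reply: tx })
            .expect("shard thread stopped");
        rx.recv().expect("shard thread stopped")
    }

    pub fn shutdown(self) {
        for tx in &self.senders {
            let _ = tx.send(Command::Shutdown);
        }
        for handle in self.handles {
            handle.join().expect("shard thread panicked");
        }
    }
}
```

Because each partition has exactly one owning thread, contention is replaced by message passing between shards.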

The name is an abbreviation for the Italian Greyhound - small yet extremely fast dogs, the best in their class. See the lovely Fabio & Cookie ❤️

Features

- Highly performant, persistent append-only log for message streaming

- Very high throughput for both writes and reads

- Low latency and predictable resource usage thanks to the Rust compiled language (no GC) and io_uring

- User authentication and authorization with granular permissions and Personal Access Tokens (PAT)

- Support for multiple streams, topics and partitions

- Support for multiple transport protocols (QUIC, TCP, WebSocket, HTTP)

- Fully operational RESTful API which can be optionally enabled

- Client SDKs available in multiple languages

- Thread-per-core, shared-nothing design, together with io_uring, guarantees the best possible performance on modern Linux systems

- Works directly with binary data, avoiding enforced schema and serialization/deserialization overhead

- Custom zero-copy (de)serialization, which greatly improves the performance and reduces memory usage.

- Configurable server features (e.g. caching, segment size, data flush interval, transport protocols etc.)

- Server-side storage of consumer offsets

- Multiple ways of polling the messages:

  - By offset (using the indexes)

  - By timestamp (using the time indexes)

  - First/Last N messages

  - Next N messages for the specific consumer

- Optional offset auto-commit (e.g. to achieve at-most-once delivery)

- Consumer groups providing message ordering and horizontal scaling across connected clients

- Message expiry with auto deletion based on the configurable retention policy

- Additional features such as server side message deduplication

- Multi-tenant support via abstraction of streams which group topics

- TLS support for all transport protocols (TCP, QUIC, WebSocket, HTTPS)

- Connectors: sinks, sources and data transformations based on custom Rust plugins

- Model Context Protocol: provide context to LLMs via the MCP server

- Optional server-side as well as client-side data encryption using AES-256-GCM

- Optional metadata support in the form of message headers

- Optional data backups and archiving to disk or S3 compatible cloud storage (e.g. AWS S3)

- Support for OpenTelemetry logs & traces + Prometheus metrics

- Built-in CLI to manage the streaming server, installable via cargo install iggy-cli

- Built-in benchmarking app to test the performance

- Single binary deployment (no external dependencies)

- Running as a single node (clustering based on Viewstamped Replication will be implemented in the near future)
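To make the polling options listed above more concrete, here is a minimal, std-only Rust sketch of a single in-memory partition that supports polling by offset, by timestamp, first/last N and next N for a consumer, with optional auto-commit of server-side consumer offsets. The `Partition`, `Message` and `Strategy` types are hypothetical and are not the Iggy SDK API. The sketch assumes dense offsets and non-decreasing timestamps, and binary-searches the messages where a real log would consult its indexes.

```rust
use std::collections::HashMap;

// Hypothetical stored message: offset within the partition plus a timestamp.
#[derive(Debug, Clone, PartialEq)]
pub struct Message {
    pub offset: u64,
    pub timestamp: u64,
    pub payload: Vec<u8>,
}

// Hypothetical names for the polling strategies.
pub enum Strategy {
    Offset(u64),
    Timestamp(u64),
    First,
    Last,
    Next,
}

// Hypothetical in-memory, append-only partition with server-side consumer offsets.
#[derive(Default)]
pub struct Partition {
    messages: Vec<Message>,
    consumer_offsets: HashMap<String, u64>, // next offset to read, per consumer
}

impl Partition {
    pub fn append(&mut self, timestamp: u64, payload: &[u8]) -> u64 {
        let offset = self.messages.len() as u64;
        self.messages.push(Message { offset, timestamp, payload: payload.to_vec() });
        offset
    }

    pub fn poll(&mut self, consumer: &str, strategy: Strategy, count: usize, auto_commit: bool) -> Vec<Message> {
        let len = self.messages.len();
        let start = match strategy {
            // Offsets are dense (0, 1, 2, ...), so an offset maps directly to an index.
            Strategy::Offset(offset) => (offset as usize).min(len),
            // Assumes non-decreasing timestamps: find the first message at or after `ts`.
            Strategy::Timestamp(ts) => self.messages.partition_point(|m| m.timestamp < ts),
            Strategy::First => 0,
            Strategy::Last => len.saturating_sub(count),
            // Resume from the consumer's stored offset (0 for a new consumer).
            Strategy::Next => (self.consumer_offsets.get(consumer).copied().unwrap_or(0) as usize).min(len),
        };
        let end = start.saturating_add(count).min(len);
        let batch = self.messages[start..end].to_vec();
        if auto_commit {
            if let Some(last) = batch.last() {
                // Committing before the caller processes the batch yields at-most-once delivery.
                self.consumer_offsets.insert(consumer.to_string(), last.offset + 1);
            }
        }
        batch
    }
}
```

Storing the consumer offset on the server side is what lets the `Next` strategy resume where a consumer left off without the client tracking it.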

Supported languages SDK

C++ and Elixir SDKs are a work in progress.

CLI

The interactive CLI is implemented in the cli project to provide the best developer experience. It is a great addition to the Web UI, especially for developers who prefer console tools.

Iggy CLI can be installed with cargo install iggy-cli and then simply accessed by typing iggy in your terminal.

Web UI

There's a dedicated Web UI for the server, which allows you to manage streams, topics and partitions, browse messages and so on. This is an ongoing effort to build a comprehensive dashboard for administering the Iggy server. Check the Web UI in the /web directory.

The Docker image is available and can be fetched via docker pull apache/iggy-web-ui.

Connectors

Iggy provides a highly performant and modular runtime for statically typed, yet dynamically loaded connectors. You can ingest data from external sources and push it to Iggy streams, or fetch data from Iggy streams and forward it to external systems. Create your own Rust plugins by simply implementing either the Source or Sink trait, and build custom data processing pipelines.
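The following std-only Rust sketch models the source, transformation and sink flow with two simplified, hypothetical traits (`DemoSource`, `DemoSink`) and a `Vec<String>` standing in for an Iggy stream. These are not the actual `Source`/`Sink` trait definitions from the connectors runtime, which loads plugins dynamically; the sketch only shows how the pieces fit together.

```rust
// Hypothetical stand-in for a connector source: yields batches from an external system.
pub trait DemoSource {
    // Returns the next batch, or `None` when the source is exhausted.
    fn poll(&mut self) -> Option<Vec<String>>;
}

// Hypothetical stand-in for a connector sink: forwards messages to an external system.
pub trait DemoSink {
    fn consume(&mut self, messages: &[String]);
}

// A source that replays fixed batches (e.g. lines read from a file).
pub struct VecSource {
    batches: Vec<Vec<String>>,
}

impl VecSource {
    pub fn new(mut batches: Vec<Vec<String>>) -> Self {
        // Reverse so that `pop` returns batches in their original order.
        batches.reverse();
        VecSource { batches }
    }
}

impl DemoSource for VecSource {
    fn poll(&mut self) -> Option<Vec<String>> {
        self.batches.pop()
    }
}

// A sink that collects everything it receives (e.g. in place of writing to a database).
#[derive(Default)]
pub struct CollectSink {
    pub received: Vec<String>,
}

impl DemoSink for CollectSink {
    fn consume(&mut self, messages: &[String]) {
        self.received.extend_from_slice(messages);
    }
}

// Source -> transform -> stream -> sink; `stream` stands in for an Iggy stream/topic.
pub fn run_pipeline(
    source: &mut dyn DemoSource,
    transform: impl Fn(String) -> Option<String>,
    stream: &mut Vec<String>,
    sink: &mut dyn DemoSink,
) {
    while let Some(batch) = source.poll() {
        let start = stream.len();
        // A transformation may rewrite a message or drop it by returning `None`.
        stream.extend(batch.into_iter().filter_map(&transform));
        // The sink reads what was just appended to the stream and forwards it onward.
        sink.consume(&stream[start..]);
    }
}
```

Keeping sources and sinks behind small traits is what lets the runtime treat every plugin uniformly while each one stays statically typed.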

The Docker image is available and can be fetched via docker pull apache/iggy-connect.

Model Context Protocol

The Model Context Protocol (MCP) is an open protocol that standardizes how applications provide context to LLMs. The Iggy MCP Server is an implementation of MCP for message streaming infrastructure. It can be used to provide context to LLMs in real time, allowing for more accurate and relevant responses.

The Docker image is available and can be fetched via docker pull apache/iggy-mcp.

Docker

The official Apache Iggy images can be found on Docker Hub. Simply type docker pull apache/iggy to pull the image.

You can also find the images for all the different tooling such as Connectors, MCP Server etc. here.

Please note that images tagged as latest are based on the official, stable releases, while the edge ones are updated directly from the latest version of the master branch.

You can find the Dockerfile and docker-compose in the root of the repository. To build and start the server, run: docker compose up.

Additionally, you can run the CLI which is available in the running container, by executing: docker exec -it iggy-server /iggy.

Keep in mind that running the container on operating systems other than Linux, where Docker runs inside a VM, might result in performance degradation.

Also, when running the container, make sure to include the additional capabilities, as found in the docker-compose file:

cap_add:
  - SYS_NICE
security_opt:
  - seccomp:unconfined
ulimits:
  memlock:
    soft: -1
    hard: -1

Or when running with docker run:

docker run --cap-add=SYS_NICE --security-opt seccomp=unconfined --ulimit memlock=-1:-1 apache/iggy:edge

Versioning

The official releases follow regular semver (e.g. 0.5.0) or have the latest tag applied (apache/iggy:latest).

We also publish edge/dev/nightly releases (e.g. 0.6.0-edge.1 or apache/iggy:edge), for both SDKs and the Docker images. These are typically compatible with the latest changes but are not guaranteed to be stable and, as the name suggests, are not recommended for production use.
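The versioning scheme above can be illustrated with a small, std-only Rust sketch that parses version strings, treats anything with a pre-release suffix such as `-edge.1` as non-stable, and orders versions using the Semantic Versioning 2.0.0 precedence rules, so `0.6.0-edge.1` sorts before `0.6.0`. The `Version` type and `precedence` function are hypothetical helpers, not part of any Iggy SDK; build metadata (`+...`) and Docker tags such as `latest` or `edge` are deliberately not parsed.

```rust
use std::cmp::Ordering;

// Hypothetical helper: a simplified semver version such as `0.5.0` or `0.6.0-edge.1`.
#[derive(Debug, PartialEq, Eq)]
pub struct Version {
    pub major: u64,
    pub minor: u64,
    pub patch: u64,
    pub pre: Option<String>, // pre-release suffix, e.g. "edge.1"
}

impl Version {
    // Returns `None` for anything that is not MAJOR.MINOR.PATCH[-PRE].
    pub fn parse(s: &str) -> Option<Version> {
        let (core, pre) = match s.split_once('-') {
            Some((core, pre)) => (core, Some(pre.to_string())),
            None => (s, None),
        };
        let mut parts = core.split('.').map(|p| p.parse::<u64>().ok());
        let (major, minor, patch) = (parts.next()??, parts.next()??, parts.next()??);
        if parts.next().is_some() {
            return None;
        }
        Some(Version { major, minor, patch, pre })
    }

    // Stable releases carry no pre-release suffix.
    pub fn is_stable(&self) -> bool {
        self.pre.is_none()
    }
}

// Compares dot-separated pre-release identifiers as described by semver 2.0.0.
fn cmp_pre(a: &str, b: &str) -> Ordering {
    let (mut ai, mut bi) = (a.split('.'), b.split('.'));
    loop {
        match (ai.next(), bi.next()) {
            (None, None) => return Ordering::Equal,
            (None, Some(_)) => return Ordering::Less, // fewer identifiers = lower precedence
            (Some(_), None) => return Ordering::Greater,
            (Some(x), Some(y)) => {
                let ord = match (x.parse::<u64>(), y.parse::<u64>()) {
                    (Ok(nx), Ok(ny)) => nx.cmp(&ny),             // numeric: compare as numbers
                    (Ok(_), Err(_)) => Ordering::Less,          // numeric < alphanumeric
                    (Err(_), Ok(_)) => Ordering::Greater,
                    (Err(_), Err(_)) => x.cmp(y),               // alphanumeric: ASCII order
                };
                if ord != Ordering::Equal {
                    return ord;
                }
            }
        }
    }
}

pub fn precedence(a: &Version, b: &Version) -> Ordering {
    (a.major, a.minor, a.patch)
        .cmp(&(b.major, b.minor, b.patch))
        .then_with(|| match (&a.pre, &b.pre) {
            (None, None) => Ordering::Equal,
            (None, Some(_)) => Ordering::Greater, // a release outranks its pre-releases
            (Some(_), None) => Ordering::Less,
            (Some(x), Some(y)) => cmp_pre(x, y),
        })
}
```

With these rules an edge build is always considered older than the stable release it leads up to, which matches its role as a preview.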